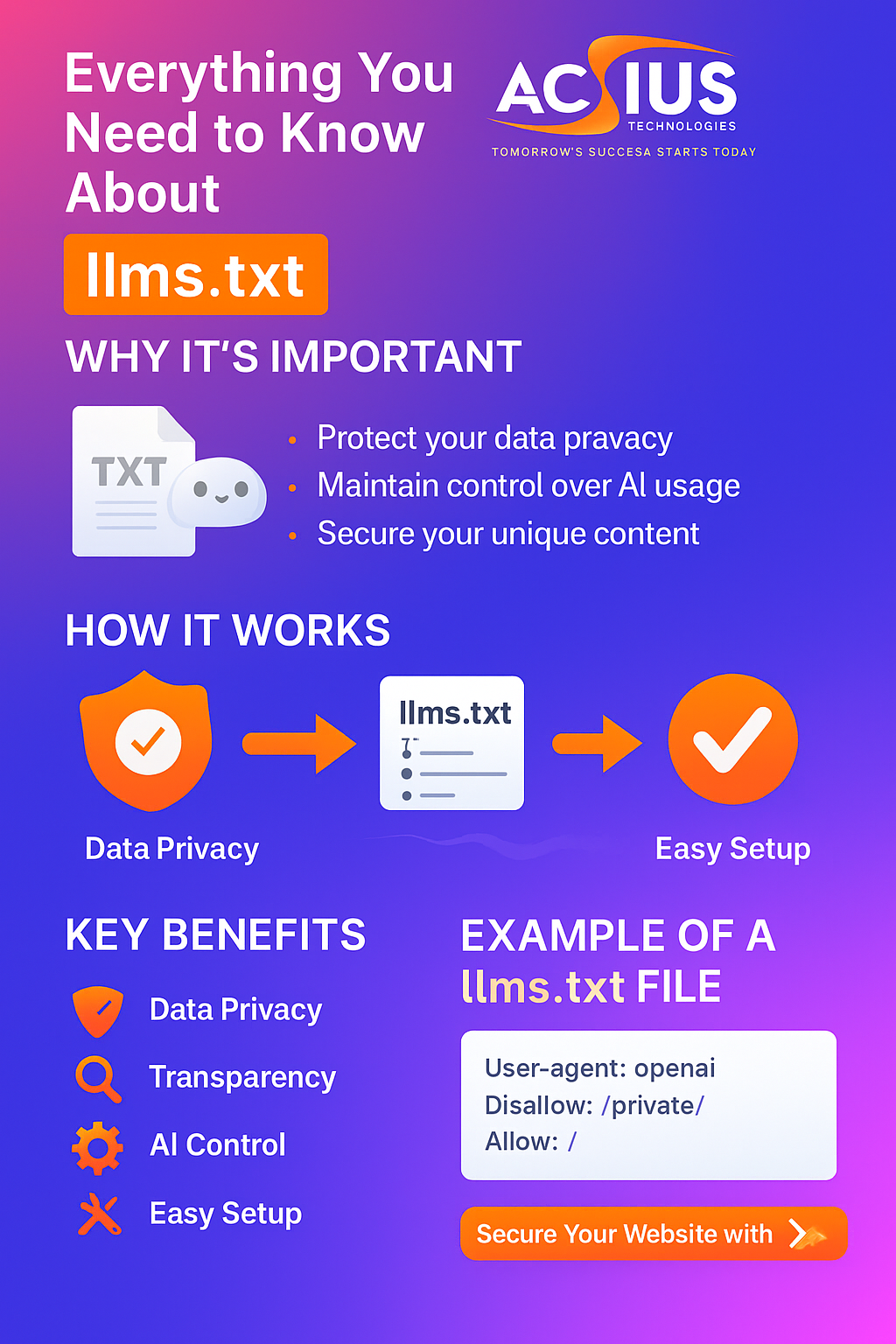

The internet is evolving fast, and artificial intelligence (AI) has become an integral part of how online data is accessed, analyzed, and used. Just as search engine crawlers read the robots.txt file to learn which parts of a website they may crawl, llms.txt is a proposed convention, not yet a ratified web standard, for telling AI crawlers and large language models (LLMs) such as ChatGPT, Gemini, and Claude which data they may or may not access.

In simple terms, llms.txt gives website owners control over how AI systems interact with their content.

Why Was llms.txt Introduced?

AI companies train their models on huge amounts of web data. Many website owners, however, do not want their content used for AI training or summarization without permission. To address this growing concern, the llms.txt file was proposed as an open convention to:

- Give website owners more control over their data.

- Encourage AI models to respect data privacy and copyright laws.

- Promote transparency and consent-based AI data collection.

This file helps websites declare their AI usage policy, much as robots.txt does for web crawlers such as Googlebot.

How Does llms.txt Work?

The llms.txt file acts as a communication tool between your website and AI crawlers. A compliant AI bot that visits your site is expected to check for the llms.txt file first (usually placed at https://yourdomain.com/llms.txt).

It then reads the permissions and restrictions you’ve defined, such as:

    User-agent: openai
    Disallow: /private/
    Allow: /

This helps ensure that only authorized data is accessed by AI systems.
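As a rough illustration of that lookup, the sketch below evaluates the three rules above with Python's standard urllib.robotparser module. It assumes an AI crawler would interpret the directives with robots.txt semantics, which nothing in the llms.txt proposal guarantees. The openai token and the yourdomain.com URLs come from the example; the may_access helper is a name made up for this sketch.

```python
from urllib.robotparser import RobotFileParser

# Rules copied from the example above. Treating them with robots.txt
# semantics is an assumption of this sketch.
LLMS_TXT = """\
User-agent: openai
Disallow: /private/
Allow: /
"""


def may_access(rules_text: str, user_agent: str, url: str) -> bool:
    """Return True if user_agent may fetch url under the given rules."""
    parser = RobotFileParser()
    parser.parse(rules_text.splitlines())
    return parser.can_fetch(user_agent, url)


print(may_access(LLMS_TXT, "openai", "https://yourdomain.com/private/report.html"))  # False
print(may_access(LLMS_TXT, "openai", "https://yourdomain.com/blog/welcome.html"))    # True
```

urllib.robotparser applies the first matching rule in file order, which is why Disallow: /private/ takes effect here before the broader Allow: /.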

Benefits of Using llms.txt

- Data Privacy and Control: You state which parts of your site AI models may use. Because the file is a public request rather than an access barrier, truly confidential data should still sit behind authentication.

- Compliance and Transparency: Demonstrates ethical AI data practices and builds trust.

- Discourages Unauthorized AI Training: Tells compliant AI crawlers not to use your content without consent. It is a request, not a technical block.

- Brand and Content Protection: Helps keep your creative and unique content from being duplicated.

- Easy to Implement: A simple text file that requires no special tooling.

What Should You Include in llms.txt?

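- User-Agent: Specifies which AI crawler or model the rule applies to (e.g., openai, google-extended, anthropic). Check each vendor's documentation for the exact token its crawler uses; in robots.txt, for example, OpenAI's crawler identifies itself as GPTBot and Anthropic's as ClaudeBot.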

- Allow/Disallow Rules: Define which sections of your site are accessible or restricted.

- Sitemap: (Optional) Directs crawlers to your public pages.

- Comments/Notes: You can include custom notes for reference.

Example of a Simple llms.txt File

    # llms.txt for Example Business
    # Website: https://example.com

    User-agent: *
    Allow: /
    Disallow: /private/
    Disallow: /internal/
    Disallow: /confidential/

    User-agent: openai
    Allow: /
    Disallow: /private/

    User-agent: anthropic
    Disallow: /

    Sitemap: https://example.com/sitemap.xml
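To see how these groups might be read, here is a minimal sketch of a rule evaluator. It assumes robots.txt-style semantics: the most specific User-agent group wins, and within a group the longest matching path decides, with Allow winning ties (the precedence RFC 9309 defines for robots.txt). Whether any AI crawler applies these semantics to llms.txt is an assumption, and parse_groups and is_allowed are hypothetical helper names.

```python
from urllib.parse import urlparse

EXAMPLE = """\
# llms.txt for Example Business
# Website: https://example.com

User-agent: *
Allow: /
Disallow: /private/
Disallow: /internal/
Disallow: /confidential/

User-agent: openai
Allow: /
Disallow: /private/

User-agent: anthropic
Disallow: /

Sitemap: https://example.com/sitemap.xml
"""


def parse_groups(text):
    """Return {user_agent: [(is_allow, path_prefix), ...]}."""
    groups = {}
    current = []        # user agents of the group being read
    seen_rule = False   # True once that group has a rule line
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # strip comments
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "user-agent":
            if seen_rule:                     # previous group is complete
                current, seen_rule = [], False
            current.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif key in ("allow", "disallow") and current:
            seen_rule = True
            if value:                         # an empty value restricts nothing
                for agent in current:
                    groups[agent].append((key == "allow", value))
    return groups


def is_allowed(groups, user_agent, url):
    """Longest matching prefix decides; Allow wins a tie; no match means allowed."""
    path = urlparse(url).path or "/"
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    best = (-1, True)
    for allow, prefix in rules:
        if path.startswith(prefix):
            best = max(best, (len(prefix), allow))
    return best[1]


GROUPS = parse_groups(EXAMPLE)
print(is_allowed(GROUPS, "openai", "https://example.com/private/plan.html"))     # False
print(is_allowed(GROUPS, "openai", "https://example.com/internal/wiki.html"))    # True
print(is_allowed(GROUPS, "anthropic", "https://example.com/blog/"))              # False
print(is_allowed(GROUPS, "somebot", "https://example.com/confidential/a.html"))  # False
```

Note that the openai group replaces the wildcard group rather than adding to it, so /internal/ is open to that agent. Parsers that apply the first matching rule in file order, as Python's urllib.robotparser does, would stop at Allow: / and permit everything, so listing Disallow lines before Allow: / is the safer ordering.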

Where Should You Place llms.txt?

The file must be placed in the root directory of your website, such as:

https://www.acsius.com/llms.txt

Make sure it’s publicly accessible so AI crawlers can read it automatically.

How llms.txt Helps SEO and Content Strategy

While llms.txt doesn’t directly affect SEO, it complements your strategy by protecting your original content from misuse. When your unique content isn’t repurposed by AI bots, your website maintains its authority and authenticity.

For a digital marketing company like ACSIUS, implementing llms.txt ensures that client projects are both secure and compliant with modern data ethics.

ACSIUS Recommendation for Businesses

As a leading digital marketing and web development agency, ACSIUS recommends every website implement an llms.txt file for improved content protection and transparency. Our team ensures:

- Your data protection policies are accurately reflected.

- Access permissions are configured for major AI crawlers.

- Integration is smooth with your existing SEO structure.

Whether you manage an eCommerce platform, corporate website, or travel blog, llms.txt helps safeguard your online assets by telling compliant AI crawlers not to use them for training without permission.

How to Create Your Own llms.txt File

- Open a text editor like Notepad.

- Write your rules (User-agent, Allow, Disallow).

- Save the file as llms.txt.

- Upload it to your website’s root directory (/public_html/).

- Check by visiting https://yourdomain.com/llms.txt.
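If you prefer to generate the file rather than type it by hand, the steps above can be sketched as a short script. The policy layout, the render_llms_txt and write_llms_txt helper names, and the example paths are assumptions made for illustration; adjust them to your own site.

```python
from pathlib import Path

# Hypothetical policy: user agent -> ordered list of (directive, path) pairs.
POLICY = {
    "*": [("Disallow", "/private/"), ("Allow", "/")],
    "anthropic": [("Disallow", "/")],
}


def render_llms_txt(policy, sitemap=None):
    """Build the file content, one blank-line-separated group per user agent."""
    lines = []
    for agent, rules in policy.items():
        lines.append(f"User-agent: {agent}")
        for directive, path in rules:
            lines.append(f"{directive}: {path}")
        lines.append("")                      # blank line ends the group
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    return "\n".join(lines).rstrip("\n") + "\n"


def write_llms_txt(directory, text):
    """Save the content as llms.txt inside directory and return the path."""
    target = Path(directory) / "llms.txt"
    target.write_text(text, encoding="utf-8")
    return target


print(render_llms_txt(POLICY, sitemap="https://yourdomain.com/sitemap.xml"))
```

Call write_llms_txt with a local folder, then upload the resulting llms.txt to the site root as described above.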

FAQs About llms.txt

1. What is the difference between robots.txt and llms.txt?

robots.txt controls search engine crawlers, while llms.txt manages how AI models access or use your data.

2. Is llms.txt mandatory for websites?

No. It is optional, but recommended for privacy, transparency, and copyright protection.

3. Will llms.txt affect my SEO?

Not directly. It doesn’t influence search rankings but supports your overall SEO by keeping your content original.

4. Do AI companies respect llms.txt?

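Not reliably. llms.txt is a proposal rather than a ratified standard, and honoring it is voluntary. The crawler controls that major AI providers such as OpenAI, Google, and Anthropic document publicly are based on robots.txt, so mirror any important rules there as well.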

5. Can ACSIUS help set up llms.txt?

Absolutely! ACSIUS offers complete website management, technical SEO, and llms.txt setup to ensure your data is secure and compliant.

Conclusion

The llms.txt file is a step toward giving website owners control over how AI interacts with their data. As the digital landscape shifts toward AI-driven technology, implementing llms.txt makes clear how you expect your content to be protected, respected, and ethically used.

At ACSIUS Technologies Pvt. Ltd., we help businesses stay future-ready with advanced digital solutions that combine data security, SEO, and innovation.